Students consider how statistics and probability drive AI text generation, learning that language models can produce text that sounds credible but may not actually be credible.

Lesson Goals |

Students will be able to…

|

Student-facing Lesson Goals |

|

Materials |

|

Preparation |

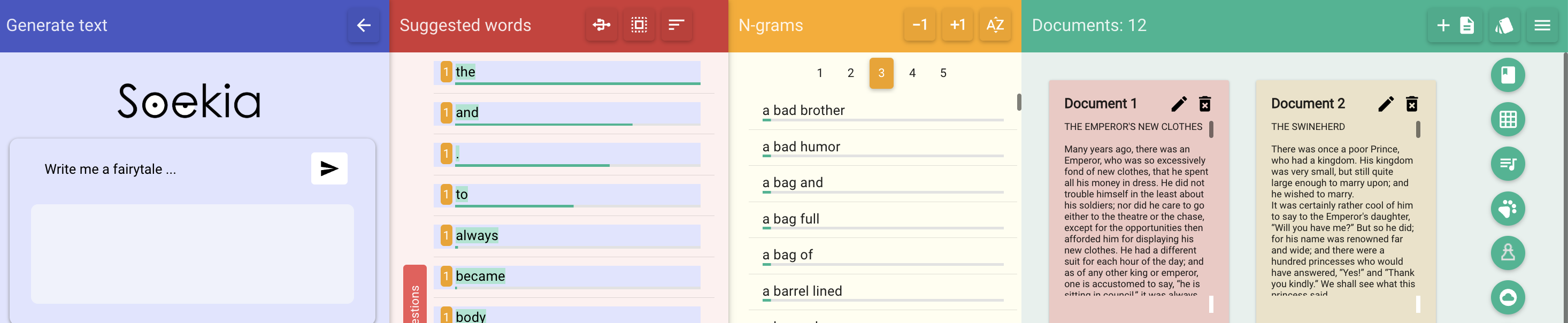

During this lesson, your students will interact with Soekia, a student-friendly statistical language model. We encourage you to spend plenty of time interacting with Soekia prior to the lesson. It is a rich learning tool with a lot of interesting features! Decide if you want to distribute the optional handout Famous AI Disasters. If so, print copies for your students.

🔗Meet Soekia

Overview

Students engage with Soekia to discover some of the nuances of a student-friendly statistical language model.

Launch

You’ve already learned about how statistical language models rely on probability to generate text and constructed your own language model from the folk song "There Was an Old Lady Who Swallowed a Fly" in our Statistical Language Modeling: Generating Text lesson.

In this lesson, you will explore Soekia, a simplified text generation tool designed for student learning. Soekia offers us one example of Natural Language Processing.

-

Open a browser window and make sure it is filling the full width of your screen.

-

The blue-colored panel occupying most of your screen is where text generation takes place. This is the level typically visible to the user when using a chatbot.

-

We’re going to tell Soekia to generate a fairy tale by clicking on the [icon].

-

Follow along and read the text until Soekia finishes writing.

-

Then complete the first section of Meet Soekia!

-

What do you Notice?

-

A new word appears about once a second.

-

Different words appear in different colors.

-

The fairy tale sounds a little strange.

-

We can pause, speed up or just add one word at a time using the play controls beneath the text window.

-

We can ask Soekia to write another paragraph by clicking "Resume Automatically".

-

We can manually select the next word from a list if we want.

-

To write a new story, we can click on the reset icon to delete what’s been generated.

-

We can copy the text that’s been generated to our clipboard using the [icon].

-

We can turn color-coding of the words on and off by clicking on [icon].

-

We can switch from continuous text generation to "single word" or "fast" text generation mode.

-

What do you Wonder?

-

Why does the story sound so odd?

-

Will Soekia tell a different story each time I ask it for a story?

-

Does ChatGPT use the same algorithm as Soekia?

-

Why are the words highlighted with different colors?

-

Hover your mouse over one or two of the highlighted words in the fairy tale. What appears?

-

If the words in your fairy tale aren’t already highlighted with different colors (greens, purples, etc.), click the [icon] beneath the generated text.

-

-

Each color corresponds with a different document; documents are labelled A, B, C, etc.

Investigate

It’s time to peek behind the curtain to see where the fairy tale you read is coming from!

-

Click the "LOOK INSIDE" button in the upper right-hand corner of Soekia.

Now, in addition to the blue text generation panel, we see three additional panels: suggested words, n-grams, and documents.

There is a LOT to discover in each panel!

-

Let’s start with the Documents panel.

-

Turn to Meet Soekia! and complete the page with your partner.

-

What did you Notice about the Documents panel?

-

There are 12 documents.

-

Each document is a different color.

-

The colors correspond with the colors that appeared (highlighting words) in the Text generation panel.

-

If I click on the icons in the upper right, I can change the training corpus.

-

If I change the prompt in the Text generation panel, the green lines at the bottom of the document change. These lines indicate how closely the wording of the prompt corresponds with the text in the document.

-

What did you Wonder about the Documents panel?

-

Can I change these documents for different ones?

-

Why haven’t I heard of some of these fairy tales?

-

What is the relationship between this Documents panel and the N-grams panel next to it?

Invite students to share their discoveries. Some of your students may discover that they can change the training corpus so that the documents include not fairy tales but tic tac toe games, musical compositions, and chess matches! In the subsequent section of the lesson, students will explore these other document collections in greater depth.

-

Before we start exploring the other panels, we’ll want to find a smaller and shorter collection of documents. Go to the collections drop-down menu and select Intelligent Monkeys?

-

Once you have the collection open, take a look at the N-grams panel. By default you should see 3 selected at the top, meaning that all of the possible trigrams in the collection will be displayed, sorted from most to least frequently occurring.

-

Turn to Meet Soekia! (n-grams) and complete the page with your partner.

-

If you finish before we’re ready to discuss as a class, see if you can predict the first 5 words Soekia would produce from the weather collection. Note: When you switch collections, the temperature will automatically reset to 60, so you’ll need to reset it to low.

You were just introduced to how Soekia scans the various lists in the N-grams panel to generate the next word in the text field when the temperature is set to low. You were also introduced to the idea that not all of the lists are "valid n-grams" from the get go. Let’s confirm that the process made sense.

Question 7 requires students to notice that all of the documents are one sentence long. As an extension, invite students to edit one of the documents so that it has a second sentence. Students should observe that editing just one document will result in Soekia producing a bigram that starts with a period!
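The frequency lists in the N-grams panel can be approximated in a few lines of Python. This is a minimal sketch, not Soekia's actual code: the `tokenize` and `count_ngrams` helpers and the two-document corpus are hypothetical, and the tokenizer simply assumes that every punctuation mark counts as its own word.

```python
import re
from collections import Counter

def tokenize(text):
    """Split text into words, treating each punctuation mark as its own word."""
    return re.findall(r"\w+|[^\w\s]", text)

def count_ngrams(documents, n):
    """Count every run of n consecutive tokens inside each document."""
    counts = Counter()
    for doc in documents:
        tokens = tokenize(doc)
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts

# Hypothetical corpus: the first document has been edited to hold two sentences.
docs = ["The sun is out. The wind is cold.", "The sun is warm."]

trigrams = count_ngrams(docs, 3)
bigrams = count_ngrams(docs, 2)

# Most frequent trigrams first, like the default view of the N-grams panel.
for gram, freq in trigrams.most_common(3):
    print(" ".join(gram), freq)

# A two-sentence document produces a bigram that starts with a period.
print(bigrams[(".", "The")])
```

Because the first document contains two sentences, the bigram counts include one entry that begins with a period, which is the effect students should observe in the extension above.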

-

When predicting the next word, when will Soekia choose a word from the list of unigrams?

-

For the first word. And for any other word, when it doesn’t find a match for a bigger n-gram that uses the previous words.

-

If the first four words in the text generation field were "Once upon a time", what would Soekia look for in the N-grams tabs to choose the next word?

-

First it would look for the most frequently occurring 5-gram that starts with "Once upon a time"

-

If there wasn’t one, it would look for the most frequently occurring 4-gram that starts with "upon a time"

-

If there wasn’t one, it would look for the most frequently occurring trigram that starts with "a time"

-

If there wasn’t one, it would look for the most frequently occurring bigram that starts with "time"

-

If there wasn’t one, it would choose the most frequently occurring unigram.

-

What else did you Notice about the N-grams panel?

-

All of the n-grams come directly from the documents.

-

I can sort the n-grams either alphabetically or by frequency.

-

Soekia interprets punctuation marks as words.

-

I can tell Soekia to produce unigrams, bigrams, trigrams, etc.

-

Soekia computes the frequency of each n-gram, just like we did from the "There Was an Old Lady Who Swallowed a Fly" corpus in the Statistical Language Modeling: Generating Text lesson.

-

What did you Wonder about the N-grams panel?

-

Why does Soekia interpret punctuation as a word?

-

How does Soekia decide which n-gram it will use in the fairy tale?
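The search order discussed above (longest n-gram first, then shorter ones, ending with unigrams) can be sketched as follows. This is an illustration under stated assumptions rather than Soekia's implementation: `next_word`, `count_ngrams`, and the small corpus are hypothetical, and the sketch always takes the single most frequent match, as at the lowest temperature.

```python
from collections import Counter

def count_ngrams(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def next_word(corpus_tokens, written, max_n=5):
    """Return (word, n): the chosen next word and the n-gram size that supplied it."""
    for n in range(max_n, 0, -1):
        if len(written) < n - 1:
            continue  # not enough words written yet for an n-gram this long
        # The last n-1 written words; an empty tuple when n is 1.
        context = tuple(written[len(written) - (n - 1):])
        candidates = {
            gram: freq
            for gram, freq in count_ngrams(corpus_tokens, n).items()
            if gram[:-1] == context
        }
        if candidates:
            best = max(candidates, key=candidates.get)
            return best[-1], n
    return None, 0  # only reached when the corpus is empty

# Hypothetical corpus, already split into tokens (punctuation counts as a word).
corpus = ("once upon a time there was a king . "
          "once upon a time there lived a queen . "
          "a time to rest .").split()

print(next_word(corpus, ["once", "upon", "a", "time"]))  # matched by a 5-gram
print(next_word(corpus, ["it", "was", "a", "time"]))     # backs off to a trigram
print(next_word(corpus, []))                             # first word: unigrams only
```

Recounting the n-grams on every call keeps the sketch short. A real tool would more likely count once and reuse the tables.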

We just looked at how Soekia chooses the next word with the temperature set to low, but what does that mean?

When the temperature is zero, we are following an algorithm that very rigidly returns the most frequently occurring n-grams in the corpus. Higher temperatures introduce some degree of randomization. In fact, when the temperature is 60 it means there’s a 60% chance that we will look outside the first set (the most frequently occurring n-grams).
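One way to picture this rule in code is shown below. It is a simplified reading of the description above, not Soekia's actual algorithm: the `choose` function is hypothetical, it treats the "first set" as the words tied for the highest frequency, and it assumes the candidate list is not empty.

```python
import random

def choose(candidates, temperature, rng=random):
    """Pick a word from (word, frequency) pairs; temperature runs from 0 to 100."""
    top_freq = max(freq for _, freq in candidates)
    top = [word for word, freq in candidates if freq == top_freq]
    rest = [word for word, freq in candidates if freq < top_freq]
    # With probability temperature/100, look outside the most frequent set.
    if rest and rng.random() < temperature / 100:
        return rng.choice(rest)
    return rng.choice(top)

# Hypothetical suggestions with their frequencies.
options = [("sun", 5), ("rain", 2), ("snow", 1)]

print(choose(options, 0))    # temperature 0 always stays in the most frequent set
print(choose(options, 60))   # about 60% of the time this is "rain" or "snow"
```

At temperature 0 the random draw can never fall below the threshold, so the same word comes back every time, which is why low-temperature output is so predictable.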

But why would we want to introduce randomness into our text generation? There are many reasons!

-

When we asked Soekia to generate text with zero randomization from the Intelligent Monkeys? collection, sticking to the most frequent n-grams trapped us in a repetitive three-word loop!

-

We might not want the same prompt to generate the exact same content every time.

-

We might want to generate text that pushes us to think creatively beyond the bounds of the box our ideas are stuck in.
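The repetitive loop is easy to reproduce with a tiny model. The sketch below is an assumption-laden illustration: the four documents are invented (they are not the contents of Soekia's collection), and for brevity the hypothetical `generate_greedy` function looks only at bigrams and always takes the most frequent follower.

```python
from collections import Counter, defaultdict

# Invented documents, not the actual Soekia collection.
docs = [
    "clever monkeys type",
    "monkeys type clever",
    "type clever monkeys",
    "monkeys eat fruit",
]

# For each word, count which words follow it.
followers = defaultdict(Counter)
for doc in docs:
    words = doc.split()
    for first, second in zip(words, words[1:]):
        followers[first][second] += 1

def generate_greedy(start, length):
    """Always append the most frequent follower of the last word."""
    words = [start]
    while len(words) < length and followers[words[-1]]:
        words.append(followers[words[-1]].most_common(1)[0][0])
    return words

print(" ".join(generate_greedy("clever", 9)))
# clever monkeys type clever monkeys type clever monkeys type
```

The less frequent continuation "monkeys eat" is never chosen, so the output can never leave the loop. Some randomness is what gives it a chance to escape.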

AI "Hallucinations"

As generative AI produces text, it often generates incorrect or misleading information. This is commonly known as an AI "hallucination".

Some experts dislike this term and are encouraging an end to its use. These experts argue that all output is "hallucinatory". Some of it happens to match reality… and some does not.

The very same process that generates "hallucinatory" text also generates the "non-hallucinatory" text. This truth helps us to understand why it is so difficult to fix the "hallucination" problem.

This term also attributes intent and consciousness to the AI, giving it human qualities when it is merely executing a program exactly as it is intended to do.

Do you think your students might enjoy learning more about specific examples of AI hallucinations? If so, print and distribute the optional handout Famous AI Disasters.

-

Return to Soekia and open the weather collection.

-

In the Suggested words panel, click "Customize temperature/ number of suggestions" and set the temperature to low. Then click [icon] in the Generate Text panel and watch as the text is generated both there and in the Suggested words panel.

-

Repeat the process for other temperature settings, noticing how the language changes.

-

What did you Notice?

-

The Suggested words panel updates automatically as Soekia generates text.

-

Soekia often suggests a 5-gram and the shorter n-grams that are contained within that 5-gram (e.g., "the wind in the trees", "wind in the trees", "in the trees" and "trees").

Synthesize

-

Describe in your own words what happens in each of Soekia’s inner panels.

-

The Documents panel contains the training corpus.

-

Soekia processes the documents and produces a list of all possible n-grams (for a given n) in the N-grams panel.

-

In the Suggested words panel, Soekia offers possible completions for different inputs.

-

The user can set the temperature to choose word suggestions that occur frequently (low temperature) or to suggest words more randomly (high temperature).

-

In the Text Generation panel, the output appears automatically or the user can opt to select each word from a list of suggestions.

-

Supervised learning includes three steps: the demonstration of the learning process, function abstraction, and using the function. Describe what each step includes for the supervised learning of a statistical language model.

-

Demonstration: For statistical language models, the demonstration phase is less obvious than in the other cases we have studied (self-driving cars and decision trees). Essentially, a human supervisor is needed to select the documents that form the training corpus. The demonstration that “given an n-gram, the completion is __” is implicit, not overt. It would be mindbogglingly overwhelming for a human to have to demonstrate each of these completions! Instead the "supervision" comes from the probabilities the computer has calculated from the corpus.

-

Function abstraction: A statistical language model assigns probabilities to different word sequences, indicating how likely it is that they will occur based on the preceding words (or n-grams).

-

Use: Function use involves generating text, one word at a time, based on probability.
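The "function" that is abstracted from the corpus can be written as a conditional probability. The formula below is the standard frequency-based estimate for n-gram models, offered as a sketch and not as a statement of how Soekia computes its suggestions. Here $c$ stands for the preceding words (the context) and $w$ for a candidate next word.

```latex
P(w \mid c) = \frac{\operatorname{count}(c\,w)}{\sum_{w'} \operatorname{count}(c\,w')}
```

For example, in a hypothetical corpus where "a time" is followed by "there" twice and by "to" once, the estimate is $P(\text{there} \mid \text{a time}) = 2 / (2 + 1) = 2/3$.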

🔗What Makes a Language?

Overview

Students discover that statistical language models do not require natural languages to function.

Launch

Let’s take a break from Soekia for a quick game of Tic Tac Toe!

-

Turn to the first section of Tic Tac Toe and play a game of Tic Tac Toe with your neighbor. If you need a refresher on how to play the game, you’ll find directions on the page.

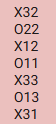

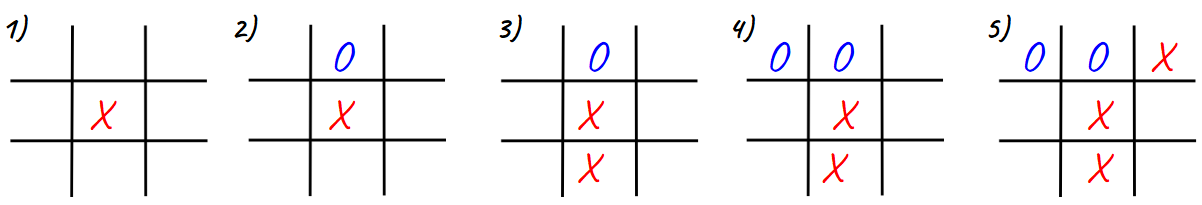

In order to communicate with Soekia about Tic Tac Toe games, we’ll need to record the moves using a notation.

-

Let’s think of the 3x3 Tic Tac Toe grid as a first quadrant coordinate plane with the origin (0,0) in the bottom left corner.

-

For each move, our notation must indicate:

-

the player whose turn it is (X or O)

-

the ordered pair (x, y) for the location of the player’s move on that turn

-

-

If player X makes a move in the bottom right corner, we would describe that turn as: X31

-

If player O makes a move in the middle of the left column, we would describe that turn as: O12

-

Complete the second section of Tic Tac Toe.

-

Move on to the third section of Tic Tac Toe and work with your partner to annotate the 5-turn sequence that is drawn for you.

-

How did you annotate the moves in this Tic Tac Toe game?

-

X22, O23, X12, O13, X33
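The notation is simple enough to encode and decode in code. This is a minimal sketch with hypothetical helper names (`encode`, `decode`, `render`). It assumes columns and rows are numbered 1 to 3, with row 1 at the bottom, as in the examples above.

```python
def encode(player, x, y):
    """Write one move in the lesson's notation, e.g. ("X", 3, 1) becomes "X31"."""
    return f"{player}{x}{y}"

def decode(move):
    """Split a move such as "O12" into the player, the column x, and the row y."""
    return move[0], int(move[1]), int(move[2])

def render(moves):
    """Draw the board as text, with empty squares shown as dots."""
    board = {}
    for move in moves:
        player, x, y = decode(move)
        board[(x, y)] = player
    rows = []
    for y in (3, 2, 1):  # print the top row first, because y grows upward
        rows.append(" ".join(board.get((x, y), ".") for x in (1, 2, 3)))
    return "\n".join(rows)

print(encode("X", 3, 1))  # bottom right corner
print(encode("O", 1, 2))  # middle of the left column
print(render("X22, O23, X12, O13, X33".split(", ")))
```

For the five-turn sequence above, the rendered board has "O O X" in the top row, "X X ." in the middle row, and an empty bottom row.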

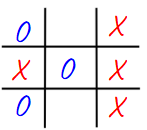

Turn to the final section of Tic Tac Toe and work with your partner to translate the "document" written in our Tic Tac Toe notation into a standard game on a Tic Tac Toe board.

Here is the game played out on a Tic Tac Toe board:


-

Is there a winner?

-

Yes! X wins the game.

Investigate

Did you notice that the collection of fairy tales you explored during the first half of this lesson is just one of several available training corpuses? Let’s explore some of the others.

-

Open a browser window and make sure it is filling the full width of your screen.

-

Follow the directions on What Makes a Language? to load the Tic Tac Toe training corpus in Soekia.

-

Complete the first section of What Makes a Language?

Soekia is a great tool for allowing us to look behind the curtain and to watch Natural Language Processing at work.

Interestingly — as the Tic Tac Toe corpus reveals — Natural Language Processing does not actually require a natural language! (A natural language is a language used by humans, like Spanish, English or Swahili.)

Just like a natural language, the Tic Tac Toe text can be parsed into n-grams and then the likelihood of each n-gram’s appearance can be determined, so Soekia was able to apply the same algorithms used on our fairytale corpus to produce output.
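To see why no natural language is needed, the same counting idea can be applied to moves instead of words. The three game openings below are invented for illustration, and `bigram_counts` is a hypothetical helper. The only requirement the sketch relies on is that spaces separate the "words".

```python
from collections import Counter

# Invented game openings written in the lesson's Tic Tac Toe notation.
games = [
    "X22 O11 X33",
    "X22 O11 X13",
    "X22 O13 X31",
]

def bigram_counts(documents):
    """Count each pair of neighboring tokens; spaces are the only word boundary."""
    counts = Counter()
    for doc in documents:
        tokens = doc.split()
        counts.update(zip(tokens, tokens[1:]))
    return counts

counts = bigram_counts(games)

# Which reply most often follows the opening move X22 in this tiny corpus?
replies = {second: freq for (first, second), freq in counts.items() if first == "X22"}
print(max(replies, key=replies.get))  # O11
```

Nothing in the code knows that the tokens are game moves, so the counting works the same way on fairy tales or on any other text that is split on spaces.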

-

Can you think of any other artificial languages that Soekia might be able to process?

-

Possible examples: chess moves, musical notation

-

What is required of an artificial language, in order for it to successfully undergo natural language processing?

-

It must be broken up with spaces so that it can be interpreted as "words", even if it is not made up of actual words.

-

Follow the directions in the second section of What Makes a Language? to access the "Music in ABC Notation" training corpus.

-

Complete the second section of What Makes a Language?, "Thinking About Natural Language Processing."

-

Does Natural Language Processing require natural language? Explain.

-

No, Natural Language Processing works on artificial languages, such as chess and music notation. As long as the language can be broken into "words", then the text can be processed just like a natural language. The very same algorithms can be applied to a wide variety of languages — both natural and artificial.

Synthesize

-

A student argues that ChatGPT — which was built on the concept of language modeling — is a reliably correct and credible source of information. How would you respond?

-

The output that ChatGPT produces depends on the corpus on which it is trained.

-

ChatGPT does not actually have any way of assessing for correctness and credibility; it simply produces one output after the next based on a model.

-

The very same process that generates so-called "hallucinatory" text also generates the "non-hallucinatory" text.

-

The student arguing that ChatGPT is a reliable source of information needs to understand ChatGPT’s output sometimes happens to match reality… but sometimes it does not!

These materials were developed partly through support of the National Science Foundation, (awards 1042210, 1535276, 1648684, 1738598, 2031479, and 1501927).  Bootstrap by the Bootstrap Community is licensed under a Creative Commons 4.0 Unported License. This license does not grant permission to run training or professional development. Offering training or professional development with materials substantially derived from Bootstrap must be approved in writing by a Bootstrap Director. Permissions beyond the scope of this license, such as to run training, may be available by contacting contact@BootstrapWorld.org.
