
Students consider possible threats to the validity of their analysis. This lesson optionally includes the Project: When Data Science Goes Bad🎨.

Lesson Goals

Students will be able to…

Student-facing Lesson Goals

Materials

*This lesson is unplugged* and does not require a computer.

Supplemental Materials

Classroom Visual

Preparation

Optional Project

🔗Threats to Validity

Overview

Students are introduced to the concept of validity, and a number of possible threats that might make an analysis invalid.

Launch

As good Data Scientists, the staff at the animal shelter are constantly gathering data about their animals, their volunteers, and the people who come to visit.

But just because they have data doesn’t mean the conclusions they draw from it are correct!

Suppose the shelter staff surveyed 1,000 cat-owners and found that 95% of them thought cats were the best pet.

Could they really claim that people generally prefer cats to dogs?

There’s more to data analysis than simply collecting data and crunching numbers.

In the example of the cat-owning survey, the claim that “people prefer cats to dogs” is invalid because the data itself wasn’t representative of the whole population (of course cat-owners are partial to cats!).
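For classes that want to see this effect in numbers, here is a minimal Python sketch (optional, since the lesson itself is unplugged). It simulates a made-up population and compares a survey of cat owners only against a random sample of everyone. Every percentage except the 95% from the story above is an assumption invented for illustration, and names such as `population` and `share_preferring_cats` are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical population. These shares are assumptions, not real data.
POPULATION_SIZE = 100_000
CAT_OWNER_SHARE = 0.30          # assumed share of people who own a cat
PREFERS_CATS_IF_OWNER = 0.95    # the 95% from the survey story
PREFERS_CATS_OTHERWISE = 0.35   # assumed share among everyone else

# Each person is a pair: (owns_cat, prefers_cats)
population = []
for _ in range(POPULATION_SIZE):
    owns_cat = random.random() < CAT_OWNER_SHARE
    chance = PREFERS_CATS_IF_OWNER if owns_cat else PREFERS_CATS_OTHERWISE
    population.append((owns_cat, random.random() < chance))


def share_preferring_cats(people):
    """Fraction of the given people who say cats are the best pet."""
    return sum(prefers for _, prefers in people) / len(people)


# Biased sample: 1,000 people drawn only from cat owners.
cat_owners = [person for person in population if person[0]]
biased_sample = random.sample(cat_owners, 1000)

# Representative sample: 1,000 people drawn from everyone.
random_sample = random.sample(population, 1000)

print("Whole population:", round(share_preferring_cats(population), 3))
print("Cat owners only: ", round(share_preferring_cats(biased_sample), 3))
print("Random sample:   ", round(share_preferring_cats(random_sample), 3))
```

Under these assumed numbers, the cat-owner survey lands near 95%, while the random sample stays close to the much lower share for the whole population.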

Data Scientists have several major Threats to Validity to worry about:

1) Selection bias — Data was gathered from a sample that is not representative of the population.

- This is the problem with surveying cat owners to find out which animal is most loved!

2) Bias in the study design — Data was gathered in such a way that it influenced the results, for example, researchers might:

- Phrase a question to manipulate people’s answers. For example: “Since annual vet care comes to about $300 for dogs and only about half of that for cats, would you say that owning a cat is less of a burden than owning a dog?” This could easily lead to a misrepresentation of people’s true opinions.

- Ask a series of questions in an order that changes how respondents answer the last one. Intentionally asking about controversial topics at the beginning might make respondents angry, which could influence how they answer a question at the end.

- Judge other cultures by the standards of their own culture rather than by the standards of the culture being studied. This is known as "cultural bias".

3) Poor choice of summary data — Even if the selection is unbiased, sometimes outliers are so extreme that they make the mean completely useless at best — and misleading at worst. For example:

- If you’re trying to get a sense of the wealth of a typical family in a community, averaging in the wealth of a few billionaires will skew the results.

4) Confounding variables — Sometimes there’s an unaccounted for variable that is lurking in the background, influencing both of the variables we are studying and confusing the relationship between them. For example:

- A study might find that cat owners are more likely to use public transportation than dog owners. But it’s not that owning a cat means you drive less: people who live in big cities are more likely to use public transportation, and also more likely to own cats.

More examples of confounding variables can be found in the correlations lesson, Correlation Does Not Imply Causation!

And there are many other threats to validity out there!
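To make threat 3 (poor choice of summary data) concrete, here is a small Python sketch comparing the mean and the median before and after a single billionaire joins a community. The net-worth figures are invented for illustration, and the variable names are hypothetical.

```python
from statistics import mean, median

# Hypothetical family net worths in dollars (made-up numbers).
typical_families = [
    40_000, 55_000, 62_000, 75_000, 90_000,
    110_000, 130_000, 150_000, 180_000,
]

# The same community after one billionaire moves in.
with_billionaire = typical_families + [2_000_000_000]

for label, data in [("Without billionaire", typical_families),
                    ("With billionaire", with_billionaire)]:
    print(f"{label}: mean = {mean(data):,.0f}, median = {median(data):,.0f}")
```

With these numbers the median only moves from 90,000 to 100,000, while the mean jumps from roughly 99,000 to over 200 million. No family in the community is anywhere near the new mean, which is why the median is the safer summary here.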

Investigate

- On Identifying Threats to Validity and Identifying Threats to Validity (2), you’ll find four different claims backed by four different datasets.

- Each one of those claims suffers from a serious threat to validity.

- Work with your partner to identify each of the four threats.

- Respond to Selection Bias or Biased Study?

Life is messy, and there are always threats to validity.

Data Science is about doing the best you can to minimize those threats, and to be upfront about what they are whenever you publish a finding.

When you do your own analysis, make sure you include a discussion of possible threats to validity!

Synthesize

Why is it important to consider potential threats to validity?

🔗Fake News and the Misuse of Statistics

Overview

Students are asked to consider the ways in which statistics are misused in popular culture, and become critical consumers of some statistical claims. Finally, they are given the opportunity to misuse their own statistics, to better understand how someone might distort data for their own ends.

Launch

You have already seen a number of ways that statistics can be misused:

1) Using the wrong measure of center with heavily-skewed data

2) Using a correlation to imply causation

3) Incorrect interpretations of a visualization, meant to trick people who don’t know how to read charts and graphs. For example:

- A reporter claiming that the r-value in a linear regression tells us "the percent chance" of something happening.

- A politician telling us that the tallest bar in a bar chart makes up the largest percentage of the whole sample.

- An advertisement telling us that the tallest bar in a histogram makes up the largest percentage of the whole sample.

There are many other ways to mislead the audience, including:

4) Intentionally using the wrong chart — Suppose someone was asked to prepare a report on the demographics of the people holding positions of power in their city government. If the city had a significant Black population, and no Black elected officials, it should be cause for further investigation. But, if someone were trying to avoid addressing the issue, they might opt to display a pie chart (hiding that lack of representation) instead of displaying a bar chart (that would show an empty bar) in hopes that nobody would even notice the issue! Note: Pie charts could be used responsibly for this same scenario if a pie chart displaying the demographics of the city’s population was presented alongside a pie chart of the demographics of the city’s elected officials!

5) Changing the scale of a chart — Changing the y-axis of a scatter plot can make the slope of the regression line seem smaller ("look, that line is basically flat anyway!") or larger ("look how quickly things have changed!").

With all the news being shared through newspapers, television, radio, and social media, it’s important to be critical consumers of information!

Investigate

- On Fake News, you’ll find some deliberately misleading claims made by slimy Data Scientists.

- Identify why each of these claims should not be trusted.

- Once you’ve finished, make a copy of Lies, Darned Lies (Google Doc).

- Come up with four misleading claims based on data or visualizations from your dataset.

- Fit them on one page, print it, and trade with another group. See if you can figure out why each other’s claims are not to be trusted!

- If you want more practice debunking Fake News, complete Fake News (2).

- What "lies" did you tell?

- Was anyone able to stump the other group?

Synthesize

- Where have you seen statistics misused in the real world?

- Over the next several weeks, keep your eyes peeled for misused statistics and bring the examples you find to class to share!

🔗Dealing with Outliers

Overview

Students are confronted with the concept of outliers: data points that stray far from the rest of the data and appear to confound observed patterns and groupings. Data Scientists take the decision of whether or not to keep outliers very seriously, as there can be profound implications for validity.

Launch

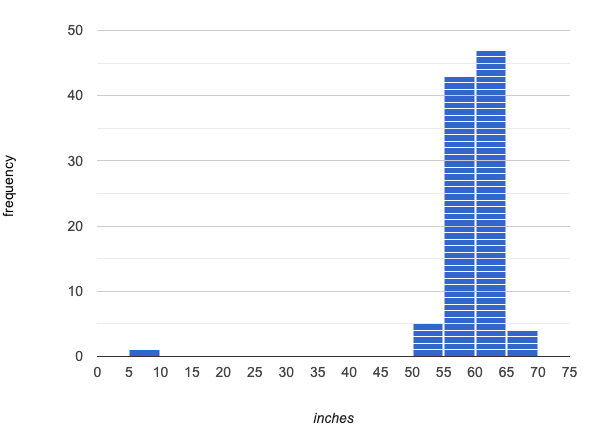

Suppose we survey the heights of 12 year olds, and almost all values are clustered between 50-70in. There’s a very low outlier, however, at 6in.


- Is there really a 12-year-old who is 6 inches tall?

  - Probably not! This is almost certainly junk data from a typo (maybe someone meant to type "60" instead of "6"?).

This typo could throw off our analysis completely! This one data point will destroy the mean, forcing us to use a different measure of center even if the rest of the data is symmetric.
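A short Python sketch can show how much one typo moves the mean. The heights below are invented for illustration (they are not the data in the image), and the variable names are hypothetical.

```python
from statistics import mean, median

# Hypothetical heights of 12-year-olds, in inches (made-up numbers).
heights = [52, 55, 57, 58, 60, 60, 61, 62, 63, 65, 68]

# The same survey with one mistyped entry: 6 instead of 60.
with_typo = heights + [6]

print(f"Clean data: mean = {mean(heights):.1f}, median = {median(heights)}")
print(f"With typo:  mean = {mean(with_typo):.1f}, median = {median(with_typo)}")
```

In this made-up sample the single bad entry pulls the mean down by more than 4 inches, while the median stays at 60.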

"Junk" data is harmful, because it can drastically change our results! If we blindly keep every outlier, it can become a serious threat to the validity of our analysis!

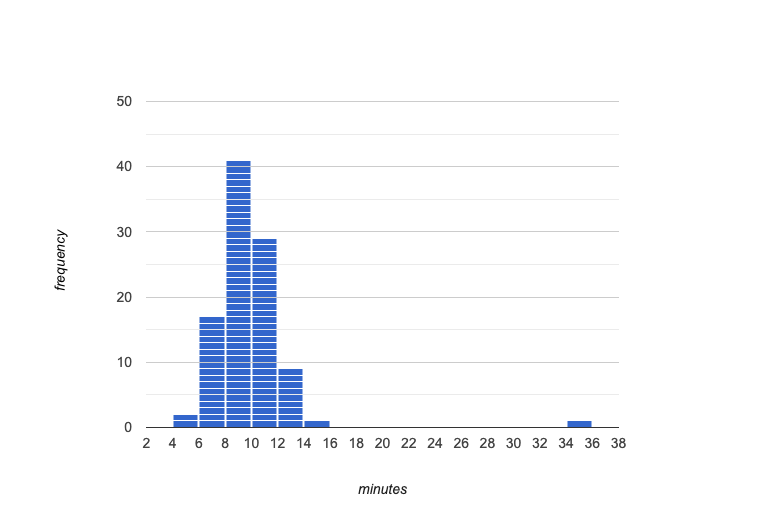

Suppose we survey the number of minutes it takes for fans to find their seats at a stadium, and almost all values are clustered between 4-16 minutes. There’s a very high outlier, however, at 35 minutes.


- Did it really take someone 35 minutes to find their seat?

  - It’s very possible! Maybe it’s someone who takes a long time getting up stairs, or someone who had to go far out of their way to use the wheelchair ramp!

If we choose to remove or keep an outlier without thinking carefully, it can become a serious threat to the validity of our analysis!

Investigate

Outliers… do they stay or do they go?

For a data scientist, an outlier is always a reason to look closer. And whether you decide to keep it or remove it from your dataset, make sure you explain your reasons in your write-up!

With your partner, complete Outliers: Should they Stay or Should they Go?

These points are called unusual observations. Unusual observations in a scatter plot are like outliers in a histogram or dot plot, but more complicated because it’s the combination of x and y values that makes them stand apart from the rest of the cloud.

Unusual observations are always worth thinking about!

Sometimes unusual observations are just random. Felix seems to have been adopted quickly, considering how much he weighs. Maybe he just met the right family early, or maybe we find out he lives nearby, got lost and his family came to get him. In that case, we might need to do some deep thinking about whether or not it’s appropriate to remove him from our dataset.

Sometimes unusual observations can give you a deeper insight into your data. Maybe Felix is a special, popular (and heavy!) breed of cat, and we discover that our dataset is missing an important column for breed!

Sometimes unusual observations are the points we are looking for! What if we wanted to know which restaurants are a good value, and which are rip-offs? We could make a scatter plot of restaurant reviews vs. prices, and look for an observation that’s high above the rest of the points. That would be a restaurant whose reviews are unusually good for the price. An observation way below the cloud would be a really bad deal.
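One possible way to make "high above the rest of the points" precise is to fit a straight line to the cloud and measure how far each point sits above or below it. This is an assumption about method, not something the lesson prescribes. The Python sketch below uses invented restaurants, prices, and review scores, and the helper name `residual` is hypothetical.

```python
from statistics import mean

# Hypothetical restaurants: (name, typical price in dollars, average review out of 5).
restaurants = [
    ("Cafe A", 10, 3.0),
    ("Bistro B", 15, 3.2),
    ("Cantina C", 20, 3.5),
    ("Diner D", 25, 4.8),
    ("Eatery E", 30, 3.9),
    ("Food Truck F", 35, 4.1),
    ("Grill G", 40, 2.5),
    ("House H", 45, 4.5),
]

prices = [price for _, price, _ in restaurants]
reviews = [review for _, _, review in restaurants]

# Least-squares line through the cloud: review = intercept + slope * price
mean_price = mean(prices)
mean_review = mean(reviews)
slope = (
    sum((p - mean_price) * (r - mean_review) for p, r in zip(prices, reviews))
    / sum((p - mean_price) ** 2 for p in prices)
)
intercept = mean_review - slope * mean_price


def residual(restaurant):
    """How far the restaurant's review sits above (+) or below (-) the line."""
    _, price, review = restaurant
    return review - (intercept + slope * price)


best_value = max(restaurants, key=residual)   # farthest above the line
worst_deal = min(restaurants, key=residual)   # farthest below the line

print("Unusually good for the price:", best_value[0])
print("Unusually bad for the price: ", worst_deal[0])
```

With this made-up data, Diner D sits farthest above the line and Grill G farthest below it. Either one would deserve a closer look before drawing any conclusion.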

Synthesize

The school cafeteria surveyed 100 students about their favorite foods, and most chose things like pizza, spaghetti, Caesar salad, etc. But two students chose foods that no one else had heard of!

- What are some reasons why these outliers should stay?

  - These students might have important dietary restrictions that need to be taken into consideration!

- What are some reasons why these outliers should go?

  - What if those foods aren’t real, and the two students were just messing around?

- If Data Scientists are the ones deciding whether an outlier is important or irrelevant, why does it matter who those Data Scientists are?

  - A Data Scientist might be biased for or against a specific group or idea, and be more likely to discard outliers they don’t like or keep those they do.

  - A Data Scientist might simply be unfamiliar with the domain of the data they’re analyzing, and not realize that an outlier is important and needs to be kept!

This is a great opportunity to remind students that Computing Needs All Voices!

🔗Additional Exercises

These materials were developed partly through support of the National Science Foundation (awards 1042210, 1535276, 1648684, 1738598, 2031479, and 1501927). Bootstrap by the Bootstrap Community is licensed under a Creative Commons 4.0 Unported License. This license does not grant permission to run training or professional development. Offering training or professional development with materials substantially derived from Bootstrap must be approved in writing by a Bootstrap Director. Permissions beyond the scope of this license, such as to run training, may be available by contacting contact@BootstrapWorld.org.
