You can read the other entries in this series: UI, Describing Images, The Definitions Area, and User Testing.

Today, we're announcing a beta release of WeScheme that adds full compatibility with screen readers. Fire up NVDA or JAWS and try it now!

When we say "Bootstrap is for all students", we mean it. Any time CS is required, whether as a mandatory course or integrated into a required math class, the bar for software accessibility becomes much higher. We take our goals seriously, and that's why we've begun a significant overhaul of our software to make it accessible to students with disabilities. To be clear: this is a journey we have only begun, and there is a lot more work to be done before Bootstrap is fully accessible to all students! In this blog post (and the ones to follow), we'll share some of our efforts thus far, the problems we've faced, and the approaches we've taken to address them. We'll also highlight some of the challenges we're still working on, in the hope that others will find them helpful.

This initiative is made possible by funding from the National Science Foundation and the ESA Foundation. We'd also like to extend a huge thank-you to Sina Bahram, for his incredible contributions, support and patience during this project.

Our UI is divided into three regions: (1) the Toolbar, (2) the Interactions Area, and (3) the Definitions Area. The first step in making the UI accessible is to annotate these regions so that screen readers can identify and describe them appropriately. The annotation system for this is ARIA (Accessible Rich Internet Applications), a detailed specification for how web developers can expose their UI to screen readers. Each region is now annotated with an ARIA label, making it easy to hop quickly from one to another. We also improved the Toolbar by ensuring that every button and field is labeled: each control announces itself with a descriptive name and its keyboard shortcut.
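To make the annotation step concrete, here is a minimal TypeScript sketch of the attributes involved. It is not WeScheme's code: the region names come from this post, but the "Run" control and its "Control+Enter" shortcut are hypothetical. It uses the standard ARIA `role="region"`, `aria-label`, and `aria-keyshortcuts` attributes, and builds plain attribute maps so the logic can be checked without a browser.

```typescript
// Sketch only: region names come from the post; the "Run" control and its
// "Control+Enter" shortcut are hypothetical examples.
type AriaAttributes = Record<string, string>;

// Each top-level region becomes a named landmark that a screen reader can
// list and jump to.
function regionAttributes(label: string): AriaAttributes {
  return { role: "region", "aria-label": label };
}

// Each toolbar control announces a descriptive name plus its shortcut.
// aria-keyshortcuts exposes the shortcut programmatically; repeating it in
// the label makes sure it is spoken even where that attribute is not announced.
function controlAttributes(name: string, shortcut: string): AriaAttributes {
  return {
    "aria-label": `${name} (${shortcut})`,
    "aria-keyshortcuts": shortcut,
  };
}

// In a browser these maps would be applied with
//   for (const [k, v] of Object.entries(attrs)) element.setAttribute(k, v);
const regions = ["Toolbar", "Interactions Area", "Definitions Area"].map(regionAttributes);
const runButton = controlAttributes("Run", "Control+Enter");
```

Keeping the attributes as data rather than scattering `setAttribute` calls makes it easy to audit that every region and control actually has a label.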


Supporting visually-impaired users also requires that we prioritize several different modalities of interaction, which changed our functionality in subtle ways. Blind users, for example, rely far more heavily on keyboard shortcuts. In all major browsers, the F5 key reloads the page. Many sighted users may not even know this, and even those who do wouldn't find it jarring to discover that F5 had been re-mapped to something else. For blind users, however, this kind of keyboard-interface decision is a real problem: if one standard key doesn't behave as it should, which others don't? What other assumptions have been violated? This forced us to re-map a number of our keyboard shortcuts, to ensure that we conform to browser and screen-reader conventions.
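One way to guard against this kind of collision is to check every application shortcut against a list of keys the browser or screen reader already owns. The sketch below assumes a small, hypothetical reserved list (F5 from the post, plus a few common browser shortcuts) and hypothetical action names; a real list would have to be compiled per browser and per screen reader. Key strings are assumed to be written in one canonical "Modifier+Key" form, since the comparison is an exact string match.

```typescript
// Hypothetical sample of keys already claimed by the browser.
const RESERVED_KEYS: ReadonlySet<string> = new Set([
  "F5",        // reload page (the example from the post)
  "Control+R", // reload page
  "Control+L", // focus the address bar
  "Control+W", // close the tab
]);

// Maps an action name to the key combination that triggers it.
type ShortcutMap = Record<string, string>;

// Returns the names of actions whose shortcut collides with a reserved key.
function findConflicts(shortcuts: ShortcutMap, reserved: ReadonlySet<string>): string[] {
  return Object.entries(shortcuts)
    .filter(([, key]) => reserved.has(key))
    .map(([action]) => action);
}

// Hypothetical mappings before and after a re-mapping pass.
const before: ShortcutMap = { run: "F5", stop: "F8" };
const after: ShortcutMap = { run: "Control+Enter", stop: "F8" };

console.log(findConflicts(before, RESERVED_KEYS)); // [ 'run' ]
console.log(findConflicts(after, RESERVED_KEYS));  // []
```

Running a check like this in a test suite turns "conform with browser conventions" into something that fails loudly when a new shortcut is added carelessly.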

Visually-impaired users also rely on numerous navigation aids that go far beyond the Tab-order "carousel of elements". Beyond simple "next element" navigation, screen readers provide a "search cursor", which is controlled with the arrow keys and can be used to navigate the UI spatially. They can also present the user with a list of "landmarks", which are available in both tab-order and spatial-order modalities. The UI must explicitly declare these landmarks for the screen reader to use them. To move between regions, we adopted ChatZilla's F6-carousel approach, which lets the user press F6 (or Shift-F6) to rotate focus between the Toolbar, Definitions, and Interactions regions.
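The F6 carousel is essentially modular arithmetic over an ordered list of regions. The sketch below uses the region order given in the post; the function names are hypothetical, and actually moving focus (calling `focus()` on the region's element) is left out so the logic runs without a browser.

```typescript
// Region order from the post: F6 moves forward, Shift-F6 moves backward.
const REGIONS = ["Toolbar", "Definitions", "Interactions"] as const;
type Region = (typeof REGIONS)[number];

function nextRegion(current: Region, backwards: boolean): Region {
  const i = REGIONS.indexOf(current);
  const step = backwards ? -1 : 1;
  // Adding the length before taking the remainder makes -1 wrap to the end.
  return REGIONS[(i + step + REGIONS.length) % REGIONS.length];
}

// Hypothetical keydown helper: returns the region to focus, or null if the
// key is not part of the carousel. A real handler would also call
// event.preventDefault() so the browser's own F6 behaviour does not fire.
function regionForKey(current: Region, key: string, shiftKey: boolean): Region | null {
  if (key !== "F6") return null;
  return nextRegion(current, shiftKey);
}
```

Because the list wraps in both directions, a user can never "fall off" the end of the carousel, which keeps the model predictable without sight.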

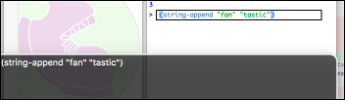

The Interactions Area is a standard REPL: the user enters a program on one line, and the computer replies with the evaluated result (or an error message) on the next. A series of program-evaluation pairs becomes a conversation between the student and the computer. Programs typed into the REPL are typically quite short, and the Interactions Area is used for student experimentation and exploration. For short programs, having a screen reader announce each symbol, keyword, or string as the user types is acceptable. The REPL "conversation" also needed to be annotated, so that it could be navigated.


Again, we borrowed from the conventions established by ChatZilla: history shortcuts like Alt-1, Alt-2, etc. have the browser read back previous program-evaluation pairs, and Alt-Up and Alt-Down page back and forth through previously-evaluated programs. All of this makes it easy to enter code into the Interactions Area. Output, however, is an entirely different story.
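The history shortcuts need a small data structure behind them. The sketch below is a hypothetical model, not WeScheme's implementation: it assumes Alt-1 reads the most recent pair, Alt-2 the one before it, and so on, and that Alt-Up/Alt-Down move a cursor through past programs. The spoken phrasing ("evaluates to") is also an assumption.

```typescript
// One program-evaluation pair from the REPL "conversation".
interface Interaction {
  program: string;
  result: string; // evaluated value or error message
}

class ReplHistory {
  private entries: Interaction[] = [];
  private cursor = 0; // position used by Alt-Up / Alt-Down

  record(entry: Interaction): void {
    this.entries.push(entry);
    this.cursor = this.entries.length; // reset to just past the newest entry
  }

  // Alt-n: text to read back for the n-th most recent pair (n = 1 is newest).
  readBack(n: number): string | null {
    const entry = this.entries[this.entries.length - n];
    if (n < 1 || entry === undefined) return null;
    return `${entry.program} evaluates to ${entry.result}`;
  }

  // Alt-Up: step back to an older program; returns its text, or null at the start.
  older(): string | null {
    if (this.cursor === 0) return null;
    this.cursor -= 1;
    return this.entries[this.cursor].program;
  }

  // Alt-Down: step toward the newest program; null means "back to an empty prompt".
  newer(): string | null {
    if (this.cursor >= this.entries.length - 1) {
      this.cursor = this.entries.length;
      return null;
    }
    this.cursor += 1;
    return this.entries[this.cursor].program;
  }
}
```

Keeping read-back (Alt-n) separate from the paging cursor (Alt-Up/Alt-Down) means a student can re-hear an old result without losing their place while recalling programs.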

First, the easy part: we can use ARIA alerts to announce new content. This even works for error messages, allowing the computer to read the error aloud to the student. But what about simple output like 3, "3", or "three"? A standard screen reader will read all of those the same way, even though they are VERY different values. And what about more complex output, like lists, vectors, hashtables, functions, and images? How should these be read?


Making an environment truly accessible means thinking through difficult cases like these. Solving them required building accessibility features in at the runtime level, so that every datatype is tagged with an appropriate aria-label, and those labels bubble up to the IDE to be read by the screen reader. Try evaluating #3(true "hello" 4) and see what happens! This is only possible because we roll our own compilers, languages, and runtimes; it couldn't be done if we were using JavaScript and the browser's built-in runtime. But what about images? Many programming assignments involve graphical output, and while an accessible curriculum should always offer alternatives, it should never prevent a blind student from completing any of those assignments.
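To illustrate how type-aware labels can tell 3 apart from "3" and bubble up from nested values, here is a TypeScript sketch over a hypothetical, much-simplified model of runtime values. The `Value` type and the exact wording of each description are assumptions for illustration; WeScheme's runtime and its actual phrasing may differ.

```typescript
// Hypothetical, simplified model of runtime values.
type Value =
  | { kind: "number"; value: number }
  | { kind: "string"; value: string }
  | { kind: "boolean"; value: boolean }
  | { kind: "vector"; items: Value[] };

// Produces the text that would become the value's aria-label.
function describe(v: Value): string {
  switch (v.kind) {
    case "number":
      return `the number ${v.value}`;
    case "string":
      return `the string ${v.value}`;
    case "boolean":
      return v.value ? "true" : "false";
    case "vector":
      if (v.items.length === 0) return "an empty vector";
      // Nested values are described recursively, so labels "bubble up".
      return `a vector of length ${v.items.length} containing ${v.items.map(describe).join(", ")}`;
    default: {
      // Compile-time check that every kind above is handled.
      const unreachable: never = v;
      return unreachable;
    }
  }
}

// Roughly what evaluating #3(true "hello" 4) might be described as.
const example: Value = {
  kind: "vector",
  items: [
    { kind: "boolean", value: true },
    { kind: "string", value: "hello" },
    { kind: "number", value: 4 },
  ],
};
console.log(describe(example));
// In the browser: outputElement.setAttribute("aria-label", describe(example));
```

Because each label is computed from the value's type rather than its printed text, the number 3 and the string "3" get different descriptions even though they would look (and sound) identical as plain output.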

Handling images is a fascinating challenge. How should our environment describe the color of an image? What about arbitrary photos and drawings? We'll share our solution for these problems in our next post. Stay tuned!

Posted December 31st, 2016